Self may be a convenient construct for intelligence in human packages...how could this change in the future?

In the case of uploading, where a human is copied to a digital substrate (via digital capture of personality, value system, experience history, memory, thoughts, etc. and/or a full copy and simulation of all neurons and other brain functions), would the concept of self be different?

Self has at least two dimensions: corporeal and mental. First the distinction between my body and other objects. With my mind, I can direct the movement of my body. Without employing my body (or more advanced metaphysics) I cannot direct the movement of other objects. This physical agent is "my body" or "my self."

Second, the distinction between my mind and the minds of others. The tableau of my thinking is not overtly visible or perceivable to others. From communication with others, I have the perception that others have thoughts as well, and that they are not explicit to me.

In short, a working definition of self is the perception and control (agency) of the entity that I can direct vs. my surroundings.

In the case of an uploaded human copied to any other physical body with action, self-direction and movement capability, it would still be useful to have the concept of the physical agent self and the mental self, although in a highly functional partial self, a partial set of mental capabilities may be appropriate (an interesting ethical question). If the uploaded human is residing exclusively in digital storage files and does not have physical agency, the concept of the physical agent self would not be useful but the concept of the mental self may still be useful. The mental self concept may evolve in many directions, including the idea of different permissioned access tiers to the mind/processing core and other areas.

What would it be like to be an identity in digital storage without physical agency, particularly knowing that previously "you" had had physical agency? Lots of aspects of physical being can presumably be simulated, the usual pain, pleasure, etc. sensory experiences we are familiar with and probably some new ones. The more important aspect of physical agency relates to survival and ability to act on the surrounding environment for survival. The digital copy would need assurance and control over aspects of survival. This could be a complicated process but at the surface seems to mainly entail the access to a variety of power supplies, computing resources and backups.

Is a saved digital copy of a human alive? Probably not unless it's being run. When a digital copy of a human is being "run," how is it known or shown that it is "alive"? As with any new area, definitions will be important. What is alive or not for a running simulation of a human is an interesting topic to be covered later. Here, it is assumed that there are some cases where a simulation is deemed to be alive and others where it is not.

There do not appear to be any ways in which a human simulation could be alive without having self-awareness in the current definition of being able to distinguish between itself and its environment at least mentally and in some cases physically. This analysis does point up the possibility of adapted or more rigorous definitions of being 'alive.'

So the concept of the corporeal and mental self may still be useful in the future but may likely be expanded upon and may exist as one of multiple metaphors for describing the agency of thought and action.

Tuesday, December 13, 2005

Durability of the convenient construct of self

Posted by LaBlogga at 2:35 PM 0 comments

Monday, December 12, 2005

Entities becoming conscious?

Is it accurate or anthropomorphic to attribute the possibility of eventual self-awareness and consciousness to multi-agent entities such as corporations, governments and mountain ranges? Anthills and beehives have not fully explained collective behaviors, but after years of evolution, the only place they become conscious is in sci fi.

Conscious entities are one flavor of FutureFear: not only the possibility that computers (AIs) become conscious and potentially take over the world, but also that large, currently (as far as we know) non-self-aware entities become self-aware and conscious and potentially take over the world. There is the further complication that humans may not be able to perceive other higher forms of consciousness, and that it possibly goes unnoticed for some time until the physical structure of the visible world changes and is controlled by something else, analogous in a basic way to the ape's eye-view of human evolution.

The logic is that the evolutionary process that produced axons and synapses, which alone are not self-aware but together are, will continue expanding and evolving consciousness, possibly jumping to occupy other substrates. Culture is a key evolutionary differential component initially developed by humans. Culture is continually evolving. If memes, not genes, are the basis for competitive transmission and survival, then the speed of cultural evolution is superseding human evolution, and more complex collective organizations of groups of people and potentially other entities may indeed "wake up" and become self-aware and conscious. As life is an emergent property of chemicals, and consciousness is an emergent property of life, there may be no end to the meta levels up; it is hard to argue to the contrary.

If this were to be the case in the instance of corporations and governments, for example, initially (like we see now for meme transmission) there must be a symbiosis, or really a dependence of the meme, government or corporation on the human substrate. Memes can evolve to having a machine-based substrate as AIs emerge (and of course conscious entities as another substrate); corporations can evolve to having electronic markets and true drone robots as their primary substrate; governments are an interesting case, but the substrate could be machine/virtually based with people still as the governed.

If they wanted to continue to participate in a society of human individuals as would be wise initially, these entities, like AIs and the genetically enhanced apes of some sci fi stories, would start arguing that they are self-aware, self-organizing, self-replicating, evolving, living, conscious entities and demand the pursuant legal rights.

Posted by LaBlogga at 1:38 PM 0 comments

Saturday, December 10, 2005

More than emergence needed for Physics break-through

Basic science, especially physics, seems stalled in a variety of ways. String Theory is likely not the answer. Particle accelerators are too big, too expensive ($5B) and take too long to build and use; they are a clumsy approach, just the only current approach. With computing improvements, hopefully physics can make the jump to informatics and experience a Phase Change as discussed by Douglas Robertson.

Emergence has been touted as a panacea concept for the future of science for the last several years. Finally, some scientists are starting to explain with greater depth what emergence is and can provide to our study of science.

Robert Laughlin, in his March 2005 book, A Different Universe: Reinventing Physics from the Bottom Down, notices the existence and necessity of the shift in scientific mindset and approach from reductionism to emergence. The shift has occurred partly because reductionism has been fully explored and found ineffective. Focusing more on the abstract rather than the concrete is an important step since the next ideas are most likely significantly different paradigms than the current status quo. Assumes broad and innovative thinking.

Santa Fe Institute external faculty member, synthetic biology startup leader and 2005 Pop!Tech speaker Norman Packard points out that emergence is the name given to critical properties or phenomena that are not derivable from the original (Newtonian, etc.) laws: phenomena such as chaos, fluid dynamics, life and consciousness (itself an emergent property of life). The idea is to think about new properties in ways unto themselves, not as derivations of the initially presented or base evidence. Assumes new laws and unrelatedness.

Robert Hazen, an Astrobiologist at Washington DC's Carnegie Institution, in his September 2005 book Gen-e-sis: The Scientific Quest for Life's Origins thinks that macro level entropy is symbiotic with micro level organization. Micro level organization (of ants to collective behavior; of axons and synapses to consciousness) is emergence. An interesting idea. Unclear if correct, but a nice example of larger-scale systemic thinking and the examination of potential interrelations between different levels and tiers of a (previously assumed to be unrelated) system. Assumes relatedness of seemingly unrelated aspects.

The point is to applaud the increasingly meaty application of emergence as an example of the short-list of new tools and thought paradigms required to make the next leaps in understanding physics and basic science.

Posted by LaBlogga at 8:22 AM 0 comments

Thursday, December 08, 2005

Expiration of convenient concepts like self and goals

A recurring theme from the DC Future Salons, promulgated by AI expert Ben Goertzel and others, is the possibility that concepts such as self, free will/volition, goals, emotions and religion are merely temporary conveniences to humans (this is already quite clear in the case of religion, as Antonio Damasio and Piero Scaruffi point out). That all of these concepts are temporary conveniences is an interesting, provocative and most likely correct idea.

Though the concepts have solid evolutionary standing as the fit results of natural selection, it may also be that they are anthropomorphic and highly related to the human substrate, and will prove irrelevant in the instance of uploaded and even extended human intelligence and/or AI.

How could this not be correct? We are only speculating about what the next incarnation/level of intelligence will be like. There are the usual partially helpful metaphors, that regarding motility, humans:plants as AI:humans and regarding evolutionary take-off, humans:apes as AI:humans. With the probable degree of difference between extended/uploaded humans/AIs and current humans, it is quite likely that crutch concepts like self and goals may not matter.

The interesting point is: what will matter? Will there be concepts of convenience and metaphors adopted by intelligence v.2? If not directed by goals, what direction will there be? Will there be direction? Already humans have evolved to the point where most behavior is directed not by survival but by happiness and, for some, self-actualization. All are still goals. Will the human objective of the quest to understand the laws and mysteries (like dark matter) of the universe persist in AI? For an AI with secure energy inputs (i.e., survival and immortality are reasonably assured), will there be any drives and direction and objectives?

Posted by LaBlogga at 5:16 PM 0 comments

Wednesday, December 07, 2005

Meme self-propagation improves with MySpace

Those memes are getting better and better at spreading themselves! Quicker than the posts and reference comments circling the blogosphere (already an exponential improvement over traditional media) is the instant distribution offered by the large and liquid communities on teen and twenties identity portal MySpace.

MySpace is a website where users can create pages with blogs, photos and other creative endeavors and interact with others. Site designers have also successfully positioned and promoted MySpace as a music distribution site for small bands. The site is home to many mainstream and longtail communities, including 90,000 Orange County (TV show) fans and 23,000 Bobby Pacquiao (super-featherweight boxer) fans. Another benefit of MySpace, and a property of all user-created content sites, is going global instantaneously, Friedman's Flat World in action.

What will the next level of meme spreading tools be like? What should we (humans) create next? (While we still can!) How long will we be able to perceive meme-spreading platforms and be necessary participants as the transmission substrate?

Posted by LaBlogga at 4:45 PM 0 comments

Sunday, December 04, 2005

AI and human evolution transcends government

Autonomy (the experience and discussion of) in early metaverse worlds like Second Life is most interesting in the sense that this is presumably a precursor to what autonomy in digital environments will be like with fully uploaded human minds.

In the crude early stages of these metaverse worlds, an unfortunate theme is facsimile to reality. Digital facsimile to the physical world is evident in the visual appearance dimension; how avatars, objects and architecture look, in the dynamics of social interaction and community building, and in conceptual themes. The tendency is to recreate similitudes of the physical world and slowly explore the new possibilities afforded by the digital environment. Presumably, dramatically more exploration will occur in the future and in freer digital environments without as many parameters established by the providers.

Analogous to the physical world is the theme of the check and balance between autonomy and community in digital environments. There is freedom to a degree, along with norms and enforceable codes that apply when norms and laws are not maintained. This is seen in all existing virtual worlds: Amazon, eBay, Second Life, etc.

In the near term, humans will likely continue to install and look to a governing body for the enforcement of laws. If their power base can be shaken, governing bodies will hopefully become much more representative and responsive (say the full constituency votes daily on issues via instant messenger). Governing bodies could also improve by being replaced by AIs who would not have the Agency problem.

Post-upload, physical location as a function of governance will be quite different, and, in fact, human intelligence can presumably evolve to a point of not needing external governance.

A separate issue is whether non-human intelligence will be the governor of human intelligence, and this is probably not the case for several reasons: first, the usual point that machine intelligence finds human intelligence largely irrelevant, and second, that machine intelligence understands that system incentives, not rules, govern behavior.

Posted by LaBlogga at 2:46 PM 3 comments

Thursday, December 01, 2005

Classification Obsession – Invitation to the Future

One almost cannot help noticing a theme in current mainstream thought – an obsession with classification. Are priests gay or not, or more precisely, how gay are gay priests? Are decorated public space trees holiday trees or Christmas trees? Should cities have trees – holiday, Christmas or whatever? Should researchers be allowed to donate eggs for stem cells? Is the war in Iraq a war in Iraq?

At the meta level, in an increasingly complex world, a struggle for classification, delineation and categorization is quite normal. Is there more meaning to the recent classification game than the natural human pattern finding tendency?

Classification is not just pattern finding/assignation but can also be read as an attempt at order imposition in an increasingly shifting and expanding world…an early warning sign of futureshock.

More than futureshock, classification is the first step in perceiving, assessing and absorbing new situations. It is humans grappling with the degree of newness available in the world today, the inevitable cant of progress and the invitation to participate.

Posted by LaBlogga at 8:40 PM 0 comments

Thursday, November 17, 2005

Increasing complexity of pop culture

Science popularizer Steven Johnson makes several interesting claims in his 2005 book, "Everything Bad is Good for You."

Johnson makes a convincing case that pop culture, mainly TV and video games, has been getting increasingly complex in the last 30 years. An important distinction is not that the content is getting more complex (it's not; content still generally panders to the lowest common denominator tendencies of fear, sex, violence, etc.) but rather that the format has been evolving: more thought is required to experience culture today than in the past.

TV has many more characters, story lines and social relationships (together called multi-threading) to track across episodes, and unstated aspects that the viewer must fill in, vs. being spoon-fed as in the Dragnet or Dallas of the 1970s, where episodes were obvious and self-contained. The viewer must also filter, determining that specialized over-jargon (e.g., as on the show ER) is not fully relevant to the plot.

Video games, Johnson asserts, require the use of higher cognition; applying probability theory, systems analysis, pattern recognition and nested problem solving. Video and other types of games are much more engaging than story/narrative because the viewer/user is the actor/experiencer, because strategy is the theme and because there are constant rewards (feedback we lack in our daily lives). There is sometimes little distinction between playing and watching a game (some brain scans have shown nearly identical activity for one viewing sports as opposed to playing sports; audience engagement can be a projection of self). Viewers put themselves in the position of the observed in video games, sports and reality TV, tracing strategies and how they would act and assessing the actions of others.

Johnson's analysis seems sound even when moving up to a broader level than was considered in the book. Evolutionary biologists, Steven Pinker and others, have long pointed out the importance of culture as one of the key aspects that separates humans from apes and it is obvious that culture evolves and has a strong impact on humans.

In the memetic context, evolving culture and the accelerating evolution of culture also make sense. Biology is hopelessly slow to evolve (in the heretofore known natural context), but culture, as some sort of proxy for human evolution and as an undivorceable aspect of human evolution, is much more quickly evolvable. Lest we forget, culture is comprised of memes, which are capable of extremely quick and complexifying evolution.

Posted by LaBlogga at 9:35 AM 1 comments

Wednesday, November 16, 2005

Vive La France? All of continental Europe slipping

The riots in France are certainly a challenge, but a problem that can be fixed. After two weeks of continuing unrest, it is clear that this is the next and more intense level of immigration friction in Europe (the Netherlands, Germany and France itself have all had earlier displays). While the friction is more than a surface problem, it is tangible and solvable, not necessarily easily, but it is clear that the French need to economically integrate immigrants into the national economy.

Recent tax legislation in Germany is a much larger problem because the instigators are the government leaders, and they do not see that their actions are driving exactly the opposite of progress. The incoming German coalition government has been proposing and passing a variety of new taxation measures, the most prominent of which is a sales tax/VAT increase of three percentage points, from 16% to 19%, due to start in 2007. Greater income tax rates are also to be imposed. With stronger twin handcuffs on income generation and consumption, there is little economic incentive for anyone to do other than succumb to the ever-dwindling social programs of the state. Everything about these tax increases is the opposite of sponsoring incentive, ingenuity, innovation and progress, and retards any hope continental Europe may have had of modernizing.

In addition to inadequate immigrant integration and Germany's new tax legislation, continental Europe is not making broad political and economic reforms and has not made the transition to the service economy that dominates the vanguard of the world's successful and evolving economies. Europe no longer has a competitive basis for manufacturing, only an historical precedent. With continent-centric short-term leadership myopia, there is a real concern that Europe will not be able to make the meaningful political and economic reforms necessary to move forward and is slipping dramatically on the stage of world competitiveness and influence.

Posted by LaBlogga at 1:35 PM 0 comments

Sunday, October 30, 2005

CIA futures report misses discontinuity

The National Intelligence Council, a division of the US CIA, conducts studies of future trends, the latest of which is called Mapping the Global Future and was published in December 2004.

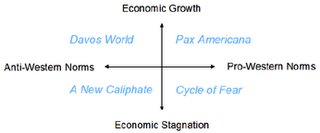

The report is a comprehensive effort by several hundred futurists offering four possible states of the world in 2020, mainly using the Scenario Planning methodology developed by the Global Business Network (GBN).

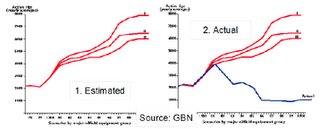

The point of scenario planning is to account for discontinuities that linear extrapolation from the present cannot capture, as demonstrated by GBN's famous charts of oil output for 1980-1990 (Figure 1, estimated; Figure 2, actual).

Mapping the Global Future is useful, in more than the Shirky-esque sense of not having similar alternatives, but is probably not broad enough to encompass the true future. Despite the National Intelligence Council’s use of scenario planning, many of the key issues articulated for 2020 are the same as those of today (the linear extrapolation fallacy of forecasting).

The study's focus is broad, with an emphasis on politics and military power. Consideration of the role of technology is particularly inadequate and uninformed given the accelerating pace of innovation and adoption and the heavily discontinuous aspects of technology. Security threats are discussed, but not the possibility that the biggest ones in 2020 may be technological security risks. The scenarios are supposed to sound unlikely but possible; the scenarios presented in the study sound too shortsighted and obvious.

Some specifics from the study that do not quite resonate are that first, the study suggests that

Second, the study suggests that ethical issues such as Muslim/Western values cleavage and an important medical issue of today, HIV/AIDS, will still be at the forefront in 2020. This again seems superficial and may or may not be so, but should have more extensive supporting analysis. Ethnic friction and AIDS do not even seem to be a linear extrapolation of today but just status quo.

Third, the study's prognosticated marginal improvement in the condition of women in 2020 ignores the discontinuous sociological shifts cultures have experienced regarding economic wealth, fertility decisions and non-traditional family structure as education and work force access increases; even if women may be competing unfavorably for jobs in youth bulge cultures, they will be utilized in senior bulge cultures.

One of the study's most interesting projections, though under-highlighted and under-expanded, is that connectivity (e.g., the connectedness of the Internet, technology and the global economy) strains nation-states in 2020. "Strains" is probably a light word for how connectivity will have acted on the nation-state by 2020, depending on, among other factors, how directly commercial interests pay for and administer their policing and security. The bifurcation between the globalized, tech-savvy creative class and everyone else bears more consideration in future politics, the issue being that not all power bases in the "everyone else" category can or will be integrated into the world economy.

Detailed Scenarios from the Mapping the Global Future study:

The study envisions four possible states of the future world, mainly concerning static political domination (again no consideration for quasi-discontinuities like dynamic cyber-communities with rotating issues influence as a function of voting support and expertise): Davos World (China and India non-Westerners dominate the leadership and participation in the world economy), Pax Americana (US still in hegemonic world political, economic and technological control), A New Caliphate (radical Muslim religious identity politics with anti-Western ethics dominate the world) and the Cycle of Fear (Orwellian world of fear and security, presence of bio/other terrorism, nuclear proliferation dominate the world).

Posted by LaBlogga at 9:35 AM 0 comments

Saturday, October 29, 2005

Next gen information cloud

The personal information cloud is a new and interesting way of looking at information, different from looking at a list of key words as a meta or abstract of a data set and different from scanning the data itself (e.g., a series of blog posts, articles or other written word formats). The information cloud is the picture that tells a thousand words. It doesn't matter how large the data set is; the important coverage areas can be seen at a glance (assuming appropriate user tagging) by the size and presence of key words.

One example of the information cloud, though not maximally visually expressive is on the right of the screen here. It is pretty clear that "Flash" content is one of the most prevalent areas in Bob's material.
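As a rough sketch of how "size and presence of key words" can be computed, the snippet below counts how often each tag was applied and scales a font size linearly between a minimum and a maximum. The function name `cloud_sizes`, the point sizes and the sample tags are all assumptions made for illustration, not taken from any particular tagging service.

```python
from collections import Counter


def cloud_sizes(tags, min_pt=10, max_pt=36):
    """Map each tag to a font size that grows linearly with its frequency."""
    counts = Counter(tags)
    if not counts:
        return {}
    lo, hi = min(counts.values()), max(counts.values())
    span = hi - lo
    sizes = {}
    for tag, n in counts.items():
        # When every tag is equally common, fall back to the midpoint size.
        scale = (n - lo) / span if span else 0.5
        sizes[tag] = round(min_pt + scale * (max_pt - min_pt))
    return sizes


# Hypothetical user-applied tags, one entry per tagged item.
tags = ["flash", "flash", "flash", "design", "design", "rss"]
for tag, size in sorted(cloud_sizes(tags).items()):
    print(f"{tag}: {size}pt")
```

Linear scaling is the simplest choice; a cloud over a very skewed data set might instead scale on the logarithm of the counts so that rare tags stay legible.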

Some of the most interesting aspects of the information cloud are the next steps:

1. Machine Tagging

When can machine tagging or application auto-tagging begin more robustly? Blogs and other websites should have a clickable information cloud button at the top so the entirety of their content can be assessed at a glance with the information cloud auto-summarizing tool. Machine tagging under another name probably already occurs in the indexing algorithms of Google and others. A precursor to machine tagging does exist now in the sense of suggested tags if the webpage/information has already been tagged in that service, as with del.icio.us.
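One very crude form of machine tagging can be sketched as picking the most frequent non-trivial words in a text. This is only an illustration under stated assumptions: the stopword list is a tiny made-up sample, `suggest_tags` is a hypothetical helper, and nothing here reflects how Google or del.icio.us actually index or suggest tags.

```python
import re
from collections import Counter

# A tiny illustrative stopword list; a real tool would use a much fuller one.
STOPWORDS = {
    "the", "a", "an", "and", "or", "of", "to", "in", "is", "are",
    "it", "that", "for", "on", "as", "with", "than",
}


def suggest_tags(text, k=3):
    """Return up to k of the most frequent non-stopword terms as candidate tags."""
    words = re.findall(r"[a-z]+", text.lower())
    counts = Counter(w for w in words if w not in STOPWORDS and len(w) > 2)
    return [word for word, _ in counts.most_common(k)]


# Hypothetical post text.
post = "Memes spread on blogs. Blogs spread memes faster than print, and memes evolve."
print(suggest_tags(post))
```

Frequency alone tends to surface generic words, so a more robust auto-tagger would probably weight terms by how unusual they are across a whole collection rather than within one post.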

2. Conceptual Tagging

Key word tagging is just the beginning. The next level is conceptual tagging. The meta meta of key words. It would be a field day for librarians and information managers. Conceptual tagging may involve subjective judgment. One person might tag a passage of text as socialist or empiricist or whatever to the disagreement of others. A wiki-type group editing tool could settle differences.

3. Group Tagsets as an Educational Tool

There can be different tag sets for different groups or eras. Applying today's key word and conceptual tagset to historical eras would give a different result than what that era would have applied; for example, today's views would quickly assess sexism and racism in previous eras, which would not have been called out then. It would be an interesting educational tool to try on the tags (regular key word and conceptual) of different groups. For example, trying on the GOP or Democrat tagset views, or Muslim and Israeli tagset views, would be an interesting way to understand the worldviews of these groups to a greater extent and compare and contrast their perceptions.

4. Functional Tagging

Another variation of conceptual tagging is functional tagging, for example tagging "scissors" not as "scissors" but by their function, "cut." There can be many different classification systems for different applications.
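A minimal sketch of functional tagging, assuming a hand-built mapping from object names to the functions they perform (the entries in `FUNCTION_TAGS` and the helper `objects_for` are invented for illustration):

```python
# Hypothetical mapping from object names to the functions they perform.
FUNCTION_TAGS = {
    "scissors": {"cut"},
    "knife": {"cut", "spread"},
    "stapler": {"fasten"},
    "tape": {"fasten"},
}


def objects_for(function):
    """Return the sorted names of all objects tagged with the given function."""
    return sorted(name for name, funcs in FUNCTION_TAGS.items() if function in funcs)


print(objects_for("cut"))  # ['knife', 'scissors']
```

Looking things up by function rather than by name is what lets one classification system serve an application the original labels were never designed for.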

5. Interacting with your Physical Personal Information Cloud

The personal information cloud is an idea that right now only applies to our online identity in certain limited segments, like our social bookmarking activity. Imagine a cloud of words surrounding your physical body, bouncing like avatar conversation, growing or shrinking as you emphasize different thoughts and ideas. One cloud might accompany you to work, for example, and other clouds to other settings. What about being able to access a mobile information cloud that surrounds you, wireless data motes at the ready?

6. Visible Personal Information Clouds as a Communications Tool

What about being able to selectively permission your information cloud to be visible to others? Seeing someone's full life information cloud is being able to see a proxy for their value system, the ability to know what is important to them. This would allow for deeper, more meaningful communication than our current primitive verbal streams and body language perceptions. Seeing that someone sorts on information and ideas, for example, would suggest that an effective communications approach would not involve telling stories about people but rather citing data, and the converse would also be true.

Posted by LaBlogga at 5:24 AM 0 comments

Friday, October 28, 2005

DOD PR in the Tech Community

The previous post discussed military plan author and DOD Office of Force Transformation representative Thomas Barnett and his dream of a US-administered global police force. He has not been the only one working the tech conference circuit: Greg Glaros of the US Navy spoke at Supernova (touting, among other things, a new carbon-fiber hull vessel with an electronic keel that reabsorbs its own wake), and JC Herz of DARPA and the National Research Council spoke at O'Reilly Media's Where 2.0 conference earlier in 2005.

With all the technology involved in military equipment, it is logical that representatives of the US military would speak at tech conferences as a norm, but the frequency seems to have stepped up in proportion to the declining support for the war in Iraq. Also, the talk material and tone are positioned as public relations, marketing and propaganda; there is more content about building support for the military's strategic plans than about the technical details. It seems to be a misguided attempt on the part of the government and a programming oversight on the part of tech conference organizers.

Posted by LaBlogga at 3:00 PM 0 comments

Thursday, October 27, 2005

Thomas Barnett's imperial global police force

Thomas Barnett discussed his new book, Blueprint for Action, at the October 26 Second Life Future Salon and, taken with the venue, blogged extensively about the event. Blueprint for Action is the implementation and expansion of ideas Barnett initially proposed in an earlier book, The Pentagon's New Map. Or, the rationale for the US to lead a global police force in its last gasp for power in the new, new world.

Barnett can be chiefly complimented for coalescing a few key ideas from the geopolitical conceptual milieu of the present and last several years. He can also be applauded for his long-term vision, his critical thinking about how the international political economy could and should evolve over the next several decades, and his concrete action plan.

Barnett's construct of the world is that there are Core and Gap countries. The Core is the traditional West plus the new Core, China and India. Gap countries are the two billion people disconnected from the Internet and the global economy, the less developed economies with bankrupt political leadership. His astute analysis highlights the shift in the dynamics of war and peace: step one is a rapid, high-tech military strike, followed by step two, a much longer and costlier nation-building phase. Different teams, skills and knowledge are required for the two phases. He rightly points out that the global merchant class (e.g., MNCs) should be willing to pay for the global warrior class (e.g., military, nation-builders) that defends them.

One of the main challenges to Barnett's plan is the US political, economic and cultural imperialism inherent in it, which is unpalatable to both Core and Gap countries, especially at a time when the US has low credibility and little support for its initiatives on the international stage. Barnett's response to imperialism charges is to focus on connectivity (e.g., connecting Gap countries to the Core), not social Darwinism, but there are many more nuances in international affairs decisions than pure commercial gains, security concerns and military strategy.

The main audiences for Barnett's work are US and international military organizations, government agencies and other government representatives. His WashingtonSpeak does not go over well with the liberal tech crowd and they are unlikely to get heavily involved with his initiatives. Techies have long had disdain for the political process and many are planning for a very different kind of tomorrow with singularities and uploading as opposed to the antiquated power jousting of old world nation-states; technology trumps politics or at minimum politics lags technology.

Certainly the linear future, the logical possibilities flowing from the current world, needs to be planned for and shaped, and Barnett is a visionary with a concrete plan, if a bit imperial and control-oriented. He just should not be surprised if leftist tech audiences do not rise up in support.

Posted by LaBlogga at 10:17 AM 0 comments

Wednesday, October 26, 2005

Future of gender

Futuristically, in the memescape and world of ectogenesis, gender theoretically has less meaning. In the memosphere, only good memes matter, not their source. In digital metaverse worlds, avatar gender is irrelevant for actualization and collaboration activities (though highly relevant for some other activities); congruity between avatar gender and operator gender would be relevant to some operators and irrelevant to others. In many ways in the present physical world, gender should be irrelevant, but it results in incorrect bias, as Malcolm Gladwell points out in "Blink."

Our current understanding of gender and gender development is still rudimentary but being elucidated with some recent medical research.

Gender is even more divisive than race and ethnicity. It is generally only socially acceptable to be male or female. Medical research documents that 1-2% of the population displays some sort of intersexing, a logical and expected phenomenon of biological variation and natural selection. Intersexing is an incongruency in the six levels of how gender is expressed in a person.

Science writer Deborah Rudacille in "The Riddle of Gender: Science, Activism, and Transgender Rights" articulates the six levels of human gender expression and their possible values: Chromosomal (XX, XY, XXY, XYY, etc.), Gonadal (testicles, ovaries or a mix of testicular/ovarian tissue), Endocrine (dominance of androgen or estrogen), Morphological (body shape, body fat distribution and facial hair), Genital (penis, vagina, varied stages of formation of both or neither) and Gender Identity (how someone is sexed in the brain and perceives his/her gender).

Formerly, the practice was for the parents to select a gender category with attendant medical treatment for intersexed children early in life. The practice of letting the child grow up and decide to be male/female/other with attendant medical treatment is now realized to be more humane. Intersexed or transgendered persons are trying to have GID (Gender Identity Disorder), as it is now termed, removed from the DSM (Diagnostic and Statistical Manual of Mental Disorders) and reclassified from a psychological disorder to a physiological condition. Homosexuality was removed from the DSM in 1974.

Interestingly, the emerging gender research has also found that genes may sex the brain in the first few weeks of embryonic development as opposed to at the 2 month mark when the default female fetus is bathed in androgens if the baby is to be male.

As people start to believe that gender is heavily based in physiology, and gender becomes increasingly less important and unseen in our physical and digital world activities, gender will have less relevance in that sphere of great human import - idea formation.

Posted by LaBlogga at 7:44 AM 2 comments

Tuesday, October 25, 2005

Early for digital world business opportunities

There are three different levels of business opportunities in digital worlds:

1. In-world economies

Digital world residents are providing products and services to each other. It might seem that there is a lot of opportunity given the substantial economic activity, but the range of possibilities is limited. The magnitude of economic activity can be seen in the daily conversions from Linden Dollars or Simoleons to US dollars and the trading volume on eBay and digital currency exchanges.

However the scope of this activity is limited to a set of in-world products and services such as real estate, escort/sexual services and product sales (clothing, hairdos, home furnishings, other objects). The market size will grow as more people subscribe to digital worlds. The market scope will grow if functionality is added to the worlds, also if new user groups come online.

2. Physical world businesses marketing to digital world customers

Physical world companies are starting to go online to market themselves and their services to digital customers. A recent example is Wells Fargo marketing financial services to Gen Y through its entertainment portal Stagecoach Island in Second Life. The digital world customer market is still small; in October 2005, Second Life had 60,000 total worldwide subscribers and presumably fewer who are active on a regular basis.

The cost for physical world companies to establish a digital world presence is minimal in the scope of their overall marketing budget and the brand cachet of being online would likely drive physical world sales. There is also a bit of the land grab idea, companies sensing a great potential future payback for being an early mover in establishing a digital world presence.

Customer avatars could interact with store avatars for pre-shopping and/or discounted sales and companies could assess mini-launches of new products in a low cost way in digital environments.

It is actually surprising that Gen Y commercial brands such as Abercrombie and Fitch, MTV, car companies, etc. do not have digital world presences yet. Part of this can be explained by the still poor visual rendering quality for direct product viewing and buying decisions, but the abstract aspects and customer research in the sales process can occur online now. Another corporate consideration would be the cost and effort required to keep online content fresh.

As every company now has a website, maybe they too will have a digital world presence in the next several years where customers can come to find out about the company and interact with virtual sales staff or other corporate information representatives, for example for a job interview screening or business development partnership meeting. With a corporate presence, companies could have internal meetings in-world as well, an interesting alternative to video conferencing and conference calls.

Further, digital worlds could be an interesting exploration for physical world companies in service delivery. In the financial services example, would clients be more willing/comfortable to discuss the intimate details of their financial situations through their avatars than in person? Would an investment advisor be able to pick up unspoken clues from an avatar meeting? Avatar meetings preserve intimacy and depersonalize interaction in a positive way, maybe making advice more objective.

3. Physical world businesses bring physical world customers in-world

Given the current technical, economic and behavioral inaccessibility of digital worlds (see "Hurdles to faster metaverse deployment") to mainstream consumers, it is too early and too limited for physical world entities to bring their physical world audience online for their products and services. One obvious future application is learning; there are tremendous benefits to using a digital environment as a learning destination, including the ability to collaborate in a non-interruptive, multi-channel way. Distance learning platforms such as those designed by market leader eCollege may merge with digital environments.

Conclusion

In-world economies are limited in their current mode but expandable as additional functionality and user groups are added. It is early for physical world brands to move online, but those with a Gen Y focus or aspiration should be establishing a presence in the digital world. These are the halcyon digital days where Coke, Lexus and Cingular are not yet plastered around the digital environment, but online merchants have plastered their own advertising around.

It is sad though expected that the current digital worlds have such a heavy component of commercialization. As other entities provide online worlds, perhaps different worlds will become known for different attributes, e.g., CollabWorld, LearningWorld, PhysicsWorlds to test different laws of physics, etc. It is entirely possible that Second Life and Sims Online will not be around in the next several years, having been superseded by the next metaverse platform(s).

Posted by LaBlogga at 7:19 AM 0 comments

Monday, October 24, 2005

Meme dualism

As with genes, the key role of memes, from the meme's eye view, is to replicate.

With the blogosphere, RSS aggregators/readers and Web 2.0 tools like del.icio.us, Technorati and Flickr, memes are replicating faster than ever and being sent to thousands of individuals upon each post. However, replicating and sending does not ensure reading. RSS readers, Technorati and del.icio.us actively draw information to individuals based on subscriptions and keyword requests, but with information overload, it is possible that many posts are never read and that many memes are never spread.

A meme is not spreading successfully unless it is read by a being with cognizance, currently human, and passed on, possibly with variation and expansion. Memeticists might argue that, per Darwinian evolution, the best memes are able to compete successfully for human attention and the ones that go unnoticed and deleted in RSS readers, etc. are not the fittest. They might also argue that the proliferation and replication of many memes is better than less proliferation, since there is a greater chance for fit memes to survive and thrive. This assumes the quality filtering the human must do is not detrimental to his/her absorption of fit memes.

Memes are still quite dependent on their symbiosis with the human substrate.

Posted by LaBlogga at 5:56 AM 0 comments

Friday, October 21, 2005

Mind the widening technogap

The fast pace of technology development and proliferation simultaneously creates gaps and provides tools for closing them. China and India will presumably industrialize more quickly and efficiently than Europe and the US did. Other quintessential examples are MIT's OpenCourseWare project, which makes free courses available online for anyone who wishes to follow them, and the leapfrogging of technology generations, such as going straight to cellular phone networks rather than copper wire networks in developing world telephony.

The technogap is changing - previously the digital divide meant developed vs. developing world. Now with broadband access, the Internet and globalized trade and culture, the new digital divide is global technophiles vs. technophobes. The technosavvy creative class has transcended nation states, but their brethren are still entrapped by local challenges.

The technophiles are rocketing to success. Now is a blank-slate time like the US in the formative 1800s: those with initiative are seeing and acting on opportunities for advancement, while others are being left far behind and will not even know where they are when they awake because so many aspects of the world will have changed so much. Re-inspiring initiative is the most pressing cause du jour, not providing access to technology.

Posted by LaBlogga at 2:02 PM 0 comments

Thursday, October 20, 2005

Privacy is actually increasing

From the usual varied speakers on the first day of the Pop!Tech conference, one macro theme related to privacy emerged: the inevitable decrease of privacy as a result of technological advances.

Even though privacy may be decreasing in some ways, it is increasing in others. It would also seem that politics has diminished more privacy recently than technological advances have; however, the future may be different.

Even though cams may observe one's every move in the physical world, there is considerably more freedom and anonymity in digital worlds, initially with message boards, email lists, chat rooms and now with 3-D environments like Second Life. While adults might be reticent to don a full-size raccoon suit and frolic in their physical neighborhood or specialized bar, they apparently have no trouble joining twenty of their plushy friends to do so online.

Privacy is a perception. The concept of privacy varies tremendously across cultures, for example Americans quickly disgorging personal details of their lives to chagrined Europeans and the full extended Middle Eastern family getting involved in decisions pertaining to one family member to the surprise of Westerners.

Another flavor of cultural differences in privacy stems from a society's focus on the individual or the group. The West's emphasis on individualism probably emphasizes privacy too, whereas Asian cultures with more emphasis on groups may have a broader perception of privacy.

Personal space norms have also influenced our notion of privacy. In the US, over the last several generations, the amount of space available to each person has been expanding. Today's teenager cannot imagine not having his/her own room, whereas a whole family may have had one room a few generations ago. Interestingly, this trend is already reversing as the densities of cities are increasing. Also interestingly, mental space has been increasing more quickly than physical space is now decreasing. With the Internet, entertainment options and free time available, there is a lot more space for one's mind to go.

Physical world privacy is being diminished and this trend will continue especially with the advent of continual video recognition technology, for example, having the search results and profile of a person walking toward you on the street instantly appear in your heads-up display. One hopes the benefits outweigh the detriments, and simultaneously recognizes the technology's inevitability. In addition, in important thought and behavioral ways, privacy, freedom and anonymity are increasing and will probably exponentiate, particularly with immersive simulations and other digital experiences.

Posted by LaBlogga at 3:33 PM 0 comments

Wednesday, October 19, 2005

Future of Telecoms

It could finally be time to divest landline telecoms (e.g., Verizon, SBC, BellSouth) as a core portfolio holding. Their prices have plummeted (down 26%, 14% and 11% respectively) vs. the S&P 500 (down 2%) so far this year. The price declines have made telecoms' 5% dividends look attractive, but dividends cannot make up for the capital depreciation, and the long-term fundamentals of the businesses are in jeopardy.
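
The dividend argument can be checked with rough arithmetic. The sketch below assumes, as a simplification, that total return is approximately the year-to-date price change plus the dividend yield, and that the full 5% yield applies to each telecom. The function name is illustrative only, and the S&P 500 dividend is ignored because no figure is given for it here.

```python
# Year-to-date price changes in percentage points, as quoted in the post.
PRICE_CHANGE_PCT = {"Verizon": -26, "SBC": -14, "BellSouth": -11}
DIVIDEND_YIELD_PCT = 5  # approximate telecom dividend yield from the post
SP500_CHANGE_PCT = -2   # price change only; no dividend figure is given


def approx_total_return_pct(price_change_pct, dividend_yield_pct):
    """Rough total return: price change plus dividend yield (an assumption)."""
    return price_change_pct + dividend_yield_pct


approx_returns = {
    name: approx_total_return_pct(change, DIVIDEND_YIELD_PCT)
    for name, change in PRICE_CHANGE_PCT.items()
}

for name, total in approx_returns.items():
    print(f"{name}: {total}% vs. S&P 500 price change {SP500_CHANGE_PCT}%")
```

Under these assumptions, all three telecoms still trail the index even after the dividend is added back.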

The behemoths re-agglomerated (in stages, the cellular stage being nearly complete now) after being separated in the 1982 antitrust split-up of AT&T. The problem is the shift in future business dynamics in the form of viable substitute services. Cellular revenues and profits have been driving telecom companies for the last few years and propping up the landline business. DSL has not manifested profits.

Landline telecoms were always a portfolio staple because they owned the last mile and the residential customer. For the first time, it actually seems possible that traditional carriers (also called ILECs) can be displaced. Residential customers need voice and data. With cellular phones, Skype, open source PBX Asterisk and other Internet-based telephony services, local landline service can disappear; an unofficial estimate of US VoIP users in 2005 is 2 million. With wireless broadband, currently early stage but with increasingly likely widespread availability in a 5 year timeframe, DSL can be supplanted. Cable internet service currently has a one third market share.

Business customers need voice and data too and have traditionally been customers of large global carriers (IXCs) like AT&T and Sprint. However, AT&T and Sprint have also gone the way of the dinosaur with their respective mergers with SBC and Nextel.

Posted by LaBlogga at 2:08 PM 1 comments

Tuesday, October 18, 2005

Majority of boomers able to experience Power Years?

Long-time gerontology expert Ken Dychtwald in his new book, The Power Years, suggests that aging boomers are not entering retirement but rather beginning their power years. The boomers are powerful because they have more money, experience and wisdom than they have had at any other time in their lives. However, they seem to have fewer opportunities at their fingertips.

Dychtwald rightly suggests that with the increase in life span, 20-30 years of traditional retirement will not be interesting, stimulating or financially possible for most boomers. Instead, they will seek to reinvent themselves in another career or volunteer opportunity.

It may be quite challenging and unpalatable for boomers to step into another career. Attending a class at the local community college will not create real understanding and experience with new fields or familiarity with modern work force culture, values and mindsets. Boomers will start to appear more often in lower-paid service jobs for which they will have to out-compete immigrants and youth.

Even if a boomer completes the significant amount of effort required to be skilled in a field of the day, say, enterprise software programming or flash web design, and bests hiring ageism, starting at entry or low level may seem like an unrewarding match for the boomer's perceived life skills and experience.

It will take truly evolved boomers to accept greatly reduced status, power and remuneration in their new work force roles. Financial exigencies will drive behavior, but the under-actualization is an unfortunate personal and societal side effect. It would be nice if corporations would adjust to adequately challenge, reward and utilize boomers who are short on recent technical skill sets and long on wisdom and life experience. However, in reality, the boomers are not qualified for what the corporations need, and it is hardly usual corporate behavior to accommodate under-qualified workers; it is usually the work force, not the employer, that does the adapting. Even though there will be an increasing shortage of workers, it will probably continue to be met by outsourcing and automated productivity gains.

The point is that there is an opportunity to help boomers (and really anyone) to actualize. Actualize in the sense of using one's unique skills and competences in a context larger than one's self involving creativity and making a real external contribution. It seems that actualization opportunities for boomers decrease upon their leaving the work force and though everyone has the responsibility to actualize (including figuring out how), current societal and institutional venues for boomers to contribute their real capabilities are not obviously present and there is a tremendous opportunity to create them.

Posted by LaBlogga at 10:30 AM 0 comments

Monday, October 17, 2005

Macro and micro science pursuits necessary

Sometimes it seems that advances in macro scientific fields such as astronomy, cosmology, astrophysics and astrobiology are less possible and more costly and time-consuming than advances in micro fields such as semiconductors, nanotech, quantum mechanics and even biotech. About the same number of log scale ratchets occur going up to the macro scale as down to the micro scale but somehow macro scale projects seem more objectionable. Recent failures in the US shuttle program and in rocket launches have also drawn attention to the high cost per project, the risk and that the reward may not be commensurate.

In fact, challenges exist in the pursuit of both macro and micro science, and even if there is not a positive ROI on each project, the overall contribution to science and human knowledge of both kinds of projects is important. The competition for funding does not suggest that all funded projects are broadly worthwhile but at least acts as some sort of gating factor; this is even more true in the current era of scarcer funding. Science is turning to philanthropy and other non-grant capital sources more regularly than before.

Next phase tools and methodology breakthroughs are needed at both ends of the spectrum; for example, accelerator technology, with the purpose of creating and detecting some of the smallest particles, is close to the limit for future effectiveness at the $5b current price tag for new accelerators. A new and better means of creating, colliding and observing particles is necessary, perhaps by machine simulation. At the macro end, anything that would compress the time, cost and in-mission reusability of all manner of space missions, particularly telescopes, would be helpful.

Posted by LaBlogga at 11:13 AM 0 comments

Saturday, October 15, 2005

Hurdles to faster metaverse deployment

After some extensive exploring around in social MMO (massively multiplayer online) Second Life, it is clearer than ever that metaverse worlds are in Mosaic (i.e., in the phase of the Internet at the time of the Mosaic browser, the early days). Unfortunately, not Mosaic in the exciting sense of the big pop being around the corner but in the somewhat disheartening way of how early stage the Internet 2.0 metaverse worlds are.

The three main challenges to faster metaverse world deployment are 1) platform inaccessibility, 2) disappointing chatroom culture and 3) myopic video-gaming niche visioning of world creators.

1. Platform inaccessibility to the mainstream

The first hurdle is meeting the system requirements of having a high end processor and graphics card and broadband.

Second, beyond rudimentary walk-around and avatar adaptation functionality, there is a steep technical learning curve and economic cost for participating in a meaningful way. Digital graphic design, object creation and programming/scripting skills are necessary to be creative. Like the multi-year wait for tools allowing non-HTML experts to develop web pages, one or more generations of technical and knowledge-building tools will be required to allow non-expert users to be creative in digital environments.

Third, one must actually spend real money in the world to buy and develop real estate, post it in the search engine and teleport people there. (The Sims Online and There charge even to access the environment; Second Life’s participation jumped when it made login to the environment free.) Money can also be used to purchase avatar clothing and objects, but this robs the participant of creativity.

Platform inaccessibility restricts the community of participants to a small group of technical experts and excludes other individuals, groups and institutions.

2. Disappointing lowest common denominator and homogeneous online culture

Digital environment culture is disappointing in three main ways: behavior, appearance and activities.

First, in some sense, Second Life, the Sims Online and There are no more than their lowest common denominator, manners-free 3-D chatrooms.

The openness of the interactive medium is an important parameter, but there is a social code of respect called for in specific venues, such as education classes. Interruptions can be in the form of distracting avatar behavior, irrelevant posts to the chatstream and offensive posts to the chatstream. This could be partially handled by giving the session leader optional control of the chat window, with the ability to screen comments so that questions related to the session are included while extraneous comments are either excluded or posted to a secondary chat window.

Second, avatar appearance is surprisingly homogeneous, especially compared to the physical world where we have less control over many of our appearance attributes. Clothing and accoutrements are heterogeneous but shape, size, hair styles and age all display tremendous homogeneity. There are many further social science explorations possible regarding avatars and some early research on avatar construction is coming out, but here the point is citing the homogeneity.

Third, activities and venues are primarily related to commercial activities, sex and gambling. While not unexpected, the narrow slice of activities deters new users. The point is that the digital environment is a platform to develop and launch whatever activities one would like, but new community members will start by joining, not creating. Sex as a big focus area helps to explain the previously mentioned avatar homogeneity.

That the wide-ranging potential of their platforms is under-realized by the world developers is clear from the market positioning, which narrowly targets video-gamers, skilled software graphics developers and software programmers. Clearly it is necessary to market to early adopters first, but these companies are moving too slowly in addressing subsequent target market segments.

Perhaps the most important way the video-game mentality deters new users is in the attitude towards knowledge and skill development. Everything about the user experience has an attitude of anti-help. Instead of the obvious “Second Life for Dummies” move-to-the-mainstream helper, help is scarce and non-intuitive. As with video games, a user needs to rely on forums, fansites, blogs and other players to learn and get help, even on basic topics. The mentality is to figure it out as you go along, that the fun is in figuring it out. Users who prefer this approach can still follow it, but mainstream takeoff would be sped up by more accessible help.

Posted by LaBlogga at 9:16 AM 0 comments

Friday, October 14, 2005

Podcasts facilitate meme transmission

Podcasts, basically non-music audio files, have gone mainstream. There are two main types of podcasts: an audio replay file of content that was delivered (e.g., lecture, conference) or broadcast (e.g., radio shows) elsewhere, and content that was created specifically for the podcast alone. It will be interesting to see how many of this second type of podcast will be re-broadcast over radio or other media, though perhaps not many.

A rough history is that podcasting began circa 2003 with IT Conversations and others making conference audio transcripts available. Then a continually growing number of commentators started making daily or weekly podcasts; these are basically individual talk radio shows. Traditional broadcast media then got involved, making radio shows available in audio files and creating specific podcast material like CNET's Podcast Central. As many traditional media companies have now incorporated blogging (though with underwhelming quality) as an imperative delivery format, they have also adopted podcasting; Newsweek, the Wall Street Journal and nearly every other major publication now podcast. Now even some corporations have convinced themselves of a podcasting imperative as Forrester's Charlene Li cites Whirlpool's podcasting activity, though this is more likely part of the corporate marketing trend towards more subtle advertising.

As with nearly anything, quality is widely dispersed, with only a small percent of material being relevant and interesting to any particular person. This further underlines the individualized media trend, since a different set of podcasts is potentially relevant and interesting to each person.

Early podcast aggregators like Podcast411 and Yahoo Podcasts (launched Oct. 2005) are not particularly useful yet because both the specific content and the quality of listed podcasts are unknown. Like other sites, Yahoo plans a user-based ranking to help identify popular podcasts, but another level of tagging is required to stratify the body of podcasts in more meaningful ways. A more helpful tagging system would identify attributes such as the level of erudition, the degree of memes vs. personal blather, and the depth and breadth of focus of podcasts. At least it is now possible to search audio pod streams with PodScope and Blinkx.

Podcasts have gone mainstream because 1) a large number of people have portable MP3 players on which to play podcasts, although some percent of podcasts are still consumed via computer stream, 2) it is relatively easy to subscribe to an ongoing stream of podcasts, 3) podcasts are cool, and the tech savvy have to be involved in the phenomenon as creators, listeners or both, and most importantly, 4) podcasts are useful and informative, a fantastic meme transmission technique in this day of the actualization-hungry creative class.

Waxing McLuhan-esque for a moment, do we now consume more media since there is more? Probably only to the extent that consumption tools make it easier to consume more media; there are still the same number of hours in a day. With more radical adjustment of humans and life activity organization, meme consumption will likely increase in the future. For now, it is clear that MP3 players offer more efficient consumption than broadcast radio, with the capability to skip forward through ads and slow portions. Yahoo is apparently offering a setting for speeding up playback (although this is largely useless given that it only applies in the Internet Explorer browser). Since nearly all podcast material is under Creative Commons licenses, humans or hopefully some more automated mechanism can create MetaCasts, a higher level summary of the key points in a category of podcasts such as a conference, topic area or segment of time. The efficient meme compression in MetaCasts would allow more media to be consumed, and other mixing techniques should also be explored.

Vive podcasting and bienvenue aux techniques for increasing the quality identification, compression and transmission of memes via podcasting. Hopefully a similar revolution in video content searchability, accessibility, mixability and creatibility will follow shortly.

Posted by LaBlogga at 6:39 PM 0 comments

Thursday, October 13, 2005

Self-help's new name...intelligence amplification

The October Bay Area Future Salon, which discussed the Age of Anxiety, and the tone of the Intelligence Amplification day at the Accelerating Change 2005 conference are two indicators of the trend to invite into science what was traditionally segmented narrowly as self-help.

One reason for this is that many approaches to AI require more than just brute computation. Layering emotion onto computation, among other ideas, is thought to be necessary for creating a true general intelligence. Scientists have therefore had to get more interested in how human emotion functions in order to attempt to reconstruct, catalyze or build AI. Another reason is that futurists, in a panic over possibly not living long enough to upload (that glorious but elusive future, always 20-30 years away), have reappropriated and reshaped self-help materials to help themselves live as long and healthily as possible in case of singularity delay.

There is an argument that self-help has become more science-like, but it is not clear that there is actually more science (e.g., properly conducted experiments with statistical results) in, for example, the poster child books of the area, Mihaly Csikszentmihalyi's Flow: The Psychology of Optimal Experience and Martin Seligman's Authentic Happiness, than in self-help books. The main reason techie readers bit was that both authors are academics. The academic veneer suddenly meant it was acceptable to discuss concepts like happiness (i.e., the number one human goal) with scientific peers.

With self-help being retitled intelligence amplification, spirituality has also started its slow road from taboo science topic to discussability. Traditional flavors of faith, atheism and new age are being upleveled and Starbucksified as boomers explore and techies collaborate for collective consciousness. One example is Steve Case's Revolution, an organization which, though not overtly spiritual, has shades of it as it seeks to shift power to consumers, bringing more (after all, this is America) choice, control and convenience to [initially] resorts, living and health.

Whatever the reasons and excuses needed, the actions and probable mindset openings that result from incorporating self-help and spiritual ideas cannot help but be positive. There is certainly always opportunity to re-name and re-market concepts as a sign of the changing times.

Posted by LaBlogga at 12:32 PM 0 comments

Wednesday, October 12, 2005

Conference fatigue stifles ideation?

The slate of must-attend conferences this fall has been intense, from Accelerating Change to Web 2.0 to State of Play to the Second Life Convention to Pop!Tech to the Serious Gaming Summit. At least Pop!Tech will have free live streaming.

Not only is there an abundance of conferences, but a lot of the same speakers and the same ideas are at all of them. The speakers have trouble keeping pace too; new or even refreshed ideas have been rare.

Certainly there are many more people doing interesting, speaker-worthy things, but no one knows about them and they are not getting picked up on the conference grid; or, of course, maybe they are too busy doing interesting things to speak about doing interesting things.

Conferences are still a great place for synergies and new ideas, but the pressure is on organizers to keep them fresh and to widen the context and definition of interesting speakers. Also, formats other than the rote formula of speaker lecture + Q&A could be explored, such as simulation, group workshops with meaningful outcomes, online collaborations, etc. BrainJams are a step in the right direction.

Posted by LaBlogga at 5:21 AM 0 comments

Tuesday, October 11, 2005

Physical entities must move to digital worlds

Should physical world entities such as each individual, company, club, interest group, celebrity, band, etc. have a digital environment presence?

One fear is a garish re-creation of the physical world in the same or maybe a worse form in the digital environment. Hm, wait, isn't this most of what Second Life is currently? The metaverse must include mainstream non-techy culture in order to ramp. The Internet 1.0 evolved slowly from deep tech to technorati to tech savvy to mainstream. With Internet 2.0, the gap will shrink rapidly; some corporates like Wells Fargo already have a 'metaverse' presence in Second Life, and authors have included an online reading and signing event on their book tours.

Although it would be interesting to see how digital environments would evolve on their own with growing groups of the tech savvy signing in, moving the mass market to the digital environment with haste will probably create a more interesting digital world more quickly. There will always be plenty of digital environment pockets for deep tech to ideate and instantiate. For example, Second Life could be the mainstream platform while deep tech moves to other metaverse worlds with radically different parameters.

As mentioned in the previous post, each physical world entity should be considering and creating its expanded-functionality digital environment presence. More interestingly, we should also be thinking about new digital world presences. For example, a book could have a digital environment presence, initially mediated by the author and readers. An idea could have a presence.

Posted by LaBlogga at 1:39 AM 0 comments

Monday, October 10, 2005

Expression in a digital environment

What is so great about a digital environment compared to the physical world?

There are currently two main kinds of experience to have in a digital environment such as Second Life, Project Entropia and Sims Online: avatar representation and interactive activities.

If the digital world is avatar-based, as the current models seem to be, you create a visual representation of yourself or your presence in the form of a person, animal or object, which is a chance to explore and experiment with identity. You can be someone or something else. Shape, size, gender, age, color, texture and other attributes are all an exploration. Another property of digital identity is dynamism: you can change your avatar's appearance at any time.

Doing activities is the second main experience in digital worlds, including social chat, dancing, gambling, designing and building objects, discussion and learning.

The list of potential activities is much longer. There could be a digital world version of any physical world activity, best realized as an adaptation and abstraction with fun new elements, not just a re-creation of the physical world activity. The ability for any group to meet and interact in a richer synchronous way than with current Internet-based methods of chats, email lists and websites is just starting to be imagined.

Each physical world entity (e.g., business, university, hobby group, book club) should be thinking about what its digital environment presence should be and where it should be (e.g., in the existing digital worlds of Second Life, etc. or in its own digital world), and should start getting it created. For businesses and services, there are two audiences/customer sets: the existing customer set that can be brought into the digital environment and the customer set that is in the digital environment already.